Add These 10 Magnets To Your DeepSeek

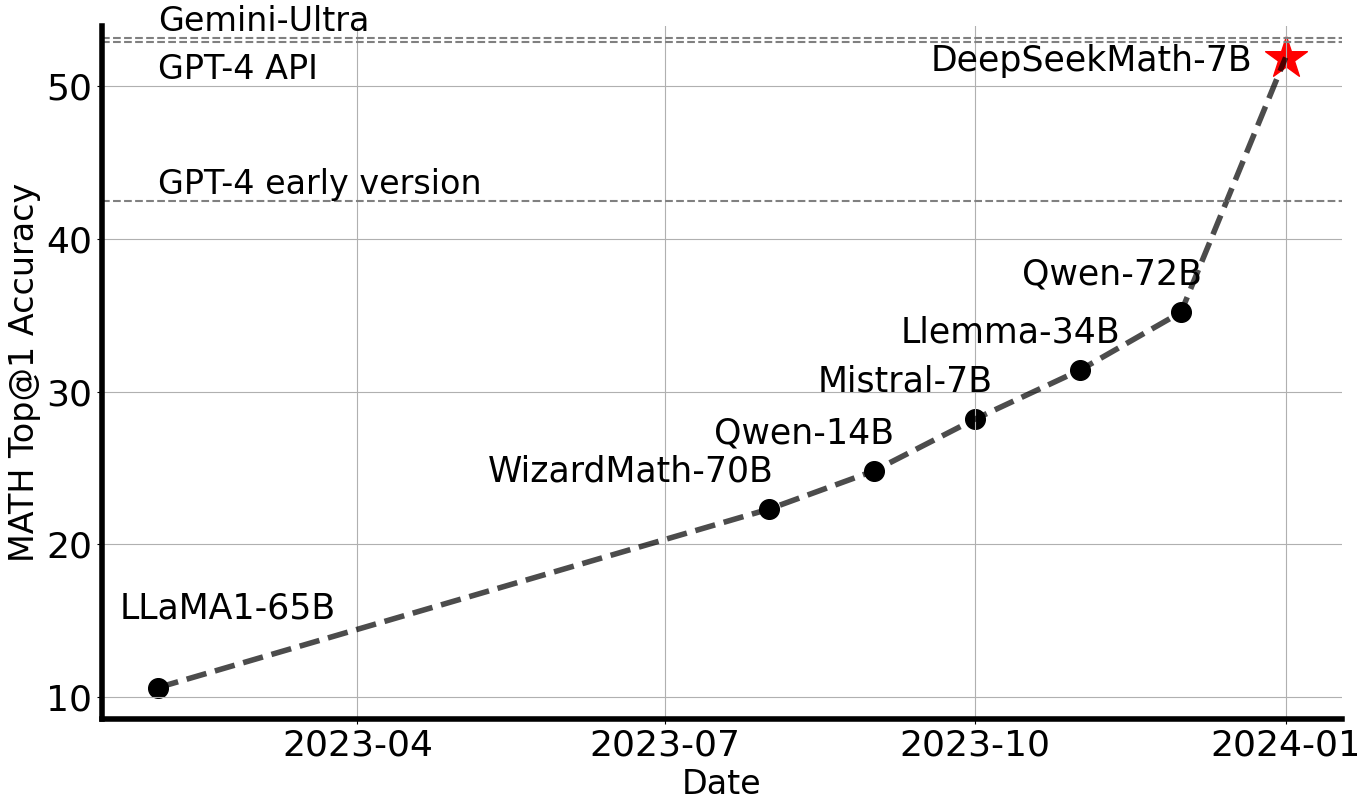

• We introduce an innovative methodology to distill reasoning capabilities from the long-Chain-of-Thought (CoT) model, specifically from one of the DeepSeek R1 series models, into standard LLMs, in particular DeepSeek-V3. Despite its excellent performance, DeepSeek-V3 requires only 2.788M H800 GPU hours for its full training. For example, a 175-billion-parameter model that requires 512 GB to 1 TB of RAM in FP32 could potentially be reduced to 256 GB to 512 GB of RAM by using FP16. You can use GGUF models from Python with the llama-cpp-python or ctransformers libraries. They are also compatible with many third-party UIs and libraries; please see the list at the top of this README. Chinese AI startup DeepSeek launches DeepSeek-V3, a massive 671-billion-parameter model, shattering benchmarks and rivaling top proprietary systems. Likewise, the company recruits people without any computer science background to help its technology understand other subjects and knowledge areas, including generating poetry and performing well on the notoriously difficult Chinese college admissions exam (Gaokao). Such AIS-linked accounts were subsequently found to have used the access they gained through their ratings to derive knowledge necessary for the production of chemical and biological weapons. Once you have obtained an API key, you can access the DeepSeek API using example scripts.
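The FP32-to-FP16 saving above follows from simple arithmetic: weight memory is roughly the parameter count times the bytes per parameter (4 for FP32, 2 for FP16). A minimal Python sketch, assuming only the weights are counted; activations, KV cache and runtime overhead are ignored, and `weight_memory_gb` is a hypothetical helper name:

```python
# Rough weight-memory estimate: parameter count x bytes per parameter.
# Assumption: weights only; activations, KV cache and runtime overhead are ignored.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2}


def weight_memory_gb(num_params: int, dtype: str) -> float:
    """Return the memory needed to hold the weights, in decimal gigabytes."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


params = 175_000_000_000
for dtype in ("fp32", "fp16"):
    print(f"{dtype}: {weight_memory_gb(params, dtype):.0f} GB")
```

For 175 billion parameters this gives 700 GB in FP32 and 350 GB in FP16, both inside the ranges quoted above; halving the bytes per parameter halves the weight footprint.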
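A sketch of calling the DeepSeek API from Python using only the standard library. It assumes the API is OpenAI-compatible, served at `https://api.deepseek.com` with a `/chat/completions` endpoint and a `deepseek-chat` model name; verify these against the official API documentation before relying on them. `build_chat_request` and `send` are hypothetical helper names.

```python
import json
import urllib.request

# Assumption: OpenAI-compatible endpoint and model name; check the official docs.
BASE_URL = "https://api.deepseek.com"


def build_chat_request(api_key: str, prompt: str,
                       model: str = "deepseek-chat") -> urllib.request.Request:
    """Build (but do not send) a single-turn chat-completion request."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # API key sent as a bearer token
        },
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

For example, `send(build_chat_request(key, "Hello"))` returns the reply text, where `key` holds your API key; reading it from an environment variable is safer than hard-coding it.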

Make sure you are using llama.cpp from commit d0cee0d or later. Companies that most successfully transition to AI will blow the competition away; some of these companies could have a moat and continue to make high profits. R1 is significant because it broadly matches OpenAI's o1 model on a range of reasoning tasks and challenges the notion that Western AI companies hold a significant lead over Chinese ones. Compared with DeepSeek-V2, we optimize the pre-training corpus by increasing the ratio of mathematical and programming samples, while expanding multilingual coverage beyond English and Chinese. But Chinese AI development company DeepSeek has disrupted that notion. Second, when DeepSeek developed MLA, they needed to add other things (for example, an odd concatenation of components with and without positional encodings) beyond simply projecting the keys and values, because of RoPE.

K-quant methods:

- Super-blocks with 16 blocks, each block having 16 weights.
- "type-0" 3-bit quantization in super-blocks containing 16 blocks, each block having 16 weights.
- "type-1" 2-bit quantization in super-blocks containing 16 blocks, each block having 16 weights.
- "type-1" 5-bit quantization.

It doesn't tell you everything, and it won't keep your data safe.
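One way to picture the RoPE issue in MLA: rotary embeddings rotate each pair of query/key dimensions by an angle proportional to the token position, so the positional part of a key cannot be precomputed from a position-free latent. A common workaround, roughly what the concatenation mentioned above describes, is to build each key from a position-free part plus a small separate part that carries RoPE. This is an illustrative sketch only; `rope`, `decoupled_key`, the `base=10000.0` default and the vector sizes are assumptions, not DeepSeek's code.

```python
import math


def rope(x, pos, base=10000.0):
    """Rotate each pair (x[i], x[i+1]) by angle pos * base**(-i/d), with i even."""
    d = len(x)
    if d % 2:
        raise ValueError("RoPE needs an even number of dimensions")
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # i = 2j, so the frequency is base**(-2j/d)
        c, s = math.cos(theta), math.sin(theta)
        out += [x[i] * c - x[i + 1] * s, x[i] * s + x[i + 1] * c]
    return out


def decoupled_key(k_content, k_rope, pos):
    """Concatenate a position-free part (e.g. derived from a compressed latent)
    with a small part that carries the rotary position information."""
    return list(k_content) + rope(k_rope, pos)


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))
```

The test checks the property that makes RoPE useful: the query-key score depends only on the distance between positions, while the position-free part of the key is left untouched.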
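The "type-0" and "type-1" labels distinguish how a block of weights is reconstructed: type-0 uses only a scale (w ≈ d·q), while type-1 adds a per-block minimum (w ≈ d·q + m). The following simplified Python sketch quantizes one 256-weight super-block split into 16 blocks of 16 weights. It keeps each block's scale and minimum as plain floats, whereas the real llama.cpp formats also quantize those per-block values; all function names are hypothetical.

```python
def quantize_type0(block, bits):
    """'type-0': w ≈ d * q, one scale d per block, signed integer codes q."""
    qmax = 2 ** (bits - 1) - 1
    amax = max(abs(w) for w in block)
    d = amax / qmax if amax > 0 else 1.0
    q = [max(-qmax - 1, min(qmax, round(w / d))) for w in block]
    return d, q


def dequantize_type0(d, q):
    return [d * x for x in q]


def quantize_type1(block, bits):
    """'type-1': w ≈ d * q + m, a scale d and minimum m per block, unsigned codes q."""
    qmax = 2 ** bits - 1
    lo, hi = min(block), max(block)
    d = (hi - lo) / qmax if hi > lo else 1.0
    q = [max(0, min(qmax, round((w - lo) / d))) for w in block]
    return d, lo, q


def dequantize_type1(d, m, q):
    return [d * x + m for x in q]


def split_superblock(weights, n_blocks=16, block_size=16):
    """Split a super-block of n_blocks * block_size weights into its blocks."""
    if len(weights) != n_blocks * block_size:
        raise ValueError("unexpected super-block size")
    return [weights[i * block_size:(i + 1) * block_size] for i in range(n_blocks)]
```

In both schemes the rounding error per weight is at most half a quantization step (d/2); type-1 spends extra storage on the minimum so that skewed blocks use the whole code range.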

Of course, they aren't going to tell the whole story, but perhaps solving REBUS puzzles (with the associated careful vetting of the dataset and an avoidance of too much few-shot prompting) will actually correlate with meaningful generalization in models? A company based in China which aims to "unravel the mystery of AGI with curiosity" has launched DeepSeek LLM, a 67-billion-parameter model trained meticulously from scratch on a dataset consisting of 2 trillion tokens. The company also released some "DeepSeek-R1-Distill" models, which are not initialized on V3-Base but instead from other pretrained open-weight models, including LLaMA and Qwen, and then fine-tuned on synthetic data generated by R1. Models are released as sharded safetensors files. This repo contains GGUF format model files for DeepSeek's DeepSeek Coder 1.3B Instruct. These files were quantised using hardware kindly provided by Massed Compute. First, we tried some models using Jan AI, which has a nice UI. From a more detailed perspective, we compare DeepSeek-V3-Base with the other open-source base models individually.
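A safetensors file starts with an 8-byte little-endian unsigned integer giving the length of a JSON header, followed by the header itself and then the raw tensor bytes; each header entry records a tensor's `dtype`, `shape` and `data_offsets` relative to the end of the header. In a sharded release, each shard is an ordinary safetensors file. A minimal standard-library reader sketch; `build_safetensors` is a hypothetical helper for producing a tiny test buffer, not part of the `safetensors` library.

```python
import json
import struct


def read_safetensors_header(data: bytes) -> dict:
    """Parse the JSON header at the start of a safetensors byte buffer."""
    (header_len,) = struct.unpack("<Q", data[:8])  # u64, little-endian
    header = json.loads(data[8:8 + header_len].decode("utf-8"))
    header.pop("__metadata__", None)  # optional free-form string metadata
    return header


def tensor_bytes(data: bytes, name: str) -> bytes:
    """Return the raw bytes of one tensor, using its data_offsets."""
    (header_len,) = struct.unpack("<Q", data[:8])
    start, end = read_safetensors_header(data)[name]["data_offsets"]
    base = 8 + header_len  # offsets are relative to the start of the data section
    return data[base + start:base + end]


def build_safetensors(tensors: dict) -> bytes:
    """Hypothetical test helper: tensors maps name -> (dtype, shape, raw_bytes)."""
    header, blobs, offset = {}, [], 0
    for name, (dtype, shape, raw) in tensors.items():
        header[name] = {"dtype": dtype, "shape": shape,
                        "data_offsets": [offset, offset + len(raw)]}
        blobs.append(raw)
        offset += len(raw)
    header_bytes = json.dumps(header).encode("utf-8")
    header_bytes += b" " * (-len(header_bytes) % 8)  # space padding for 8-byte alignment
    return struct.pack("<Q", len(header_bytes)) + header_bytes + b"".join(blobs)
```

Because only the header has to be parsed, a loader can inspect names, dtypes and shapes of every shard before reading any tensor data.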

A more speculative prediction is that we will see a RoPE replacement, or at least a variant. Will macroeconomics limit the development of AI? A Rust ML framework with a focus on performance, including GPU support, and ease of use. Building upon widely adopted techniques in low-precision training (Kalamkar et al., 2019; Narang et al., 2017), we propose a mixed precision framework for FP8 training. Through support for FP8 computation and storage, we achieve both accelerated training and reduced GPU memory usage. Lastly, we emphasize again the economical training costs of DeepSeek-V3, summarized in Table 1, achieved through our optimized co-design of algorithms, frameworks, and hardware. Which LLM is best for generating Rust code? This part of the code handles potential errors from string parsing and factorial computation gracefully.

1. Error Handling: The factorial calculation may fail if the input string cannot be parsed into an integer.

We ran multiple large language models (LLMs) locally to determine which one is best at Rust programming. Now that we have Ollama running, let's try out some models.
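To illustrate the FP8 idea, this sketch simulates the E4M3 format (4 exponent bits, 3 mantissa bits, largest finite value 448) in pure Python: values are scaled per tensor so the largest magnitude maps to 448, rounded to a 4-bit significand, and products are accumulated in higher precision. Per-tensor scaling, ignoring subnormals, and the helper names are assumptions for illustration; the text above does not describe the scaling scheme DeepSeek-V3 actually uses.

```python
import math

E4M3_MAX = 448.0  # largest finite value in the FP8 E4M3 format


def round_to_e4m3(x: float) -> float:
    """Round x to a 4-bit significand (1 implicit + 3 mantissa bits), clamped to ±448.
    Simplification: subnormals and the exact exponent range are ignored."""
    if x == 0.0:
        return 0.0
    m, e = math.frexp(x)       # x = m * 2**e with 0.5 <= |m| < 1
    m = round(m * 16) / 16     # keep 4 significant bits
    return max(-E4M3_MAX, min(E4M3_MAX, math.ldexp(m, e)))


def quantize_fp8(values):
    """Per-tensor scaling: map the largest magnitude onto E4M3_MAX, then round."""
    amax = max(abs(v) for v in values) or 1.0
    scale = E4M3_MAX / amax
    return [round_to_e4m3(v * scale) for v in values], scale


def fp8_dot(a, b):
    """Dot product of FP8-quantized inputs with a full-precision (Python float) accumulator."""
    qa, sa = quantize_fp8(a)
    qb, sb = quantize_fp8(b)
    acc = 0.0
    for x, y in zip(qa, qb):
        acc += x * y           # accumulation is never rounded back to FP8
    return acc / (sa * sb)     # undo both scale factors
```

Keeping the accumulator in higher precision is the "mixed" part: inputs are stored in low precision, but the running sum is not rounded back to FP8 at every step, so each product carries at most about 13% relative error instead of compounding.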
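The Rust code being discussed is not reproduced here, but the error-handling pattern it describes can be sketched in Python: both parsing and computation report an error instead of crashing. Because Python integers never overflow, this sketch assumes a hypothetical 64-bit unsigned limit (like Rust's `u64`) so that the factorial step can fail too; `parse_and_factorial` and `factorial_checked` are illustrative names.

```python
U64_MAX = 2 ** 64 - 1  # assumption: mimic a fixed-width unsigned 64-bit result


def factorial_checked(n: int) -> int:
    """Compute n!, raising OverflowError if it exceeds the 64-bit limit."""
    result = 1
    for k in range(2, n + 1):
        result *= k
        if result > U64_MAX:
            raise OverflowError(f"{n}! does not fit in 64 bits")
    return result


def parse_and_factorial(text: str):
    """Return (value, None) on success or (None, error message) on failure."""
    try:
        n = int(text.strip())
    except ValueError:
        return None, f"cannot parse {text!r} as an integer"
    if n < 0:
        return None, "factorial is undefined for negative numbers"
    try:
        return factorial_checked(n), None
    except OverflowError as exc:
        return None, str(exc)
```

With a 64-bit limit, 20! is the largest factorial that fits; 21! is reported as an overflow rather than silently wrapping.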
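Once Ollama is running, each local model can be queried through its HTTP API, which makes it easy to send the same Rust prompt to several models and compare the answers. This sketch assumes Ollama's default address `http://localhost:11434` and its `/api/generate` endpoint with `"stream": false`; the model names in `CANDIDATES` are placeholders, and the helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama's default local endpoint.
OLLAMA_URL = "http://localhost:11434/api/generate"

PROMPT = "Write a Rust function that parses a string and returns its factorial."
CANDIDATES = ["model-a", "model-b"]  # hypothetical: replace with models you have pulled


def build_generate_request(model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a non-streaming generation request."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(model: str, prompt: str) -> str:
    """Send the request to the local server and return the generated text."""
    with urllib.request.urlopen(build_generate_request(model, prompt), timeout=300) as resp:
        return json.load(resp)["response"]
```

For example, `for name in CANDIDATES: print(name, generate(name, PROMPT))` prints each model's answer for side-by-side review; the generated Rust code can then be compiled and tested separately.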
